Developmental AI: How Virtual Worlds Could Contribute

A presentation of the developmental AI paradigm, and how it could benefit from integration with modern VR technology.

In my previous articles, I made the case for going beyond deep learning in AI, and for the importance of embodiment. There is no doubt that deep learning is an incredibly powerful tool that will play an important role in building intelligent machines. But deep learning is bound by its statistical nature. Statistical regression intrinsically cannot produce causal inferences; it can only uncover correlations, because establishing causal relationships requires experimentation. Deep learning can reduce the dimensionality of a classification problem while preserving as much information as possible, which is remarkable, but it does not create new information.

We want to stress here the importance of letting the system run experiments: trying out variations or creative models and testing them against observation, in order to uncover causal structures and ultimately create new knowledge. In a nutshell, this is what evolution does, and it is also the core principle of the scientific method. More details about these ideas are available in my previous articles referenced above, and I also recommend Judea Pearl's work on causality.
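To make the distinction between correlation and experiment concrete, here is a toy illustration that is not part of the original argument. A hidden variable `z` drives both `x` and `y`, while `x` has no causal effect on `y`; the model, its probabilities and the function names are hypothetical choices for this sketch. Passive observation shows a strong dependence of `y` on `x`, whereas setting `x` ourselves (an intervention, written do(x) in Judea Pearl's notation) makes that dependence vanish.

```python
import random


def sample(n, intervene_x=None, seed=0):
    """Draw (x, y) pairs from a toy causal model with a hidden confounder z.

    z drives both x and y; x has NO causal effect on y.
    If intervene_x is given, the experimenter sets x directly (do(x)),
    which cuts the z -> x link.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        z = rng.random() < 0.5                            # hidden common cause
        if intervene_x is None:
            x = z if rng.random() < 0.9 else not z        # x mostly copies z
        else:
            x = intervene_x                               # experimenter forces x
        y = z if rng.random() < 0.9 else not z            # y depends on z only
        data.append((x, y))
    return data


def p_y_given_x(data, x_value):
    """Empirical P(y = True | x = x_value)."""
    ys = [y for x, y in data if x == x_value]
    return sum(ys) / len(ys)


# Observation only: y appears strongly driven by x
observed = sample(100_000)
obs_gap = p_y_given_x(observed, True) - p_y_given_x(observed, False)

# Experiment: set x ourselves and compare outcomes
do_true = sample(100_000, intervene_x=True, seed=1)
do_false = sample(100_000, intervene_x=False, seed=2)
exp_gap = p_y_given_x(do_true, True) - p_y_given_x(do_false, False)

print(f"observational gap: {obs_gap:.2f}, interventional gap: {exp_gap:.2f}")
```

Analytically, the observational gap in this model is about 0.64 (0.82 versus 0.18), while the interventional gap is zero: no amount of passive data fitting distinguishes the two situations, but a single kind of experiment does.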

This observation about the importance of experiments suggests a tight connection between strong AI and robotics: robots have a body, and are therefore fundamentally capable of acting in the world and building up causal models from their interactions. From this perspective, addressing the next step in AI may necessarily involve some kind of robotic body.

There are, however, at least three major limitations with robots. The first, obvious one is that they are expensive and require a team to maintain them. Having worked in robotics for about 10 years, I can testify that robots can cost millions of dollars to build, fall apart before you know it, and are usually not fully suited to your particular needs (because costs force compromises on CPU, cameras, actuators, battery, etc.). Even with an unlimited budget, you can’t compress the time it takes to design, build and maintain robots, and off-the-shelf robots are no magic bullet, because you will still need to customize them and, at the very least, maintain them.

The second limitation is that physical interactions with the world are slow and cannot be accelerated. There is a mechanical limit to how fast you can move objects, watch them fall and interact with them: object dynamics have their own intrinsic characteristic time. This, by the way, is why superintelligent machines could not find a unifying theory of physics in about one second, as some proponents of the singularity claim: no matter how fast these machines think, they will need to test their theories, probably by building things like solar-system-wide particle accelerators, which takes a lot of physical time however smart you are. But even leaving theoretical physics aside, building up a representation of the world by interacting with it, as the program of developmental robotics advocates, would require incredibly long experiments, which would likely have to be run hundreds or thousands of times, making the problem even more acute. We are talking about years of experiments, at best.

The third limitation is more closely linked to developmental considerations. Recent studies suggest that the maturation of the baby’s body plays a central role in staging the complexity of sensorimotor learning in the early years. One example is the resolution of the eye: limited at birth, it improves progressively over the first months, gradually exposing the brain to increasingly complex signals, with each stage building on the previous ones to refine the corresponding sensorimotor models. Exposing an immature brain to the full complexity of a mature eye would probably not work, as the amount of information processing and categorization required would be overwhelming. Think of a crystal that grows progressively, layer upon layer. The eye is not the only example; the body itself undergoes crucial changes: the capacity to locomote is nonexistent at birth (for humans), which restricts sensorimotor fine-tuning first to head/eye and then to hand/eye coordination. Once this is mastered, the quest for walking can begin. One thing at a time.
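One way to mimic this kind of maturation in a simulated agent is to stage the resolution of its visual input. The sketch below is a hypothetical illustration, not a method taken from the studies mentioned above: `acuity_factor` maps the agent's age (in simulation steps) to a block-averaging factor, and `perceive` blurs each frame accordingly while keeping the sensor's shape constant, so the same downstream model sees progressively finer detail. The stage lengths and blur factors are arbitrary assumptions.

```python
def downsample(image, factor):
    """Block-average a square grayscale image (list of lists) by `factor`,
    then expand it back to the original size so the sensor shape never changes."""
    n = len(image)
    out = [[0.0] * n for _ in range(n)]
    for bi in range(0, n, factor):
        for bj in range(0, n, factor):
            rows = range(bi, min(bi + factor, n))
            cols = range(bj, min(bj + factor, n))
            block = [image[i][j] for i in rows for j in cols]
            mean = sum(block) / len(block)
            for i in rows:
                for j in cols:
                    out[i][j] = mean
    return out


def acuity_factor(age_steps, stages=(8, 4, 2, 1), steps_per_stage=1000):
    """Hypothetical maturation schedule: the blur factor shrinks as the agent 'ages'."""
    stage = min(age_steps // steps_per_stage, len(stages) - 1)
    return stages[stage]


def perceive(image, age_steps):
    """What the agent's 'eye' delivers at a given developmental age."""
    return downsample(image, acuity_factor(age_steps))


# Usage: an 8x8 checkerboard looks uniformly grey to a "newborn" agent
checker = [[(i + j) % 2 for j in range(8)] for i in range(8)]
newborn_view = perceive(checker, age_steps=0)      # every pixel averaged to 0.5
mature_view = perceive(checker, age_steps=5000)    # full detail restored
```

Keeping the output shape fixed is a deliberate choice in this sketch: the learner's architecture stays the same across stages, and only the information content of its input grows.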

Now back to robots: by design, their body is static and predefined, and while we can imagine disabling or downgrading certain parts and activating them over time, this is not always easy. Some robots, like the iCub, have been designed entirely with the idea of reproducing a child’s morphology. Compare the iCub morphology (crawling on the floor, small limbs) to a « mature » equivalent like Atlas (walking, jumping, large limbs, heavy payload):

These limitations have driven many researchers to consider using robots in simulated environments. You don’t have to build the machine: you can just program the robot’s body structure, which you can also evolve to simulate maturation, and of course you can potentially accelerate time if you have enough computing power. But this approach has historically been considered a poor one, for at least two reasons, which we review below and which I think may no longer hold.

The first criticism of simulation for AI was the poor rendering fidelity compared with the real world, also known as the « reality gap ». This is a problem because the system can exploit artificial regularities of the simulation to make its job easier. Take for example a blocky, “perfect” world, with pure straight lines making up the environment. The AI system will learn and exploit these regularities, for example characterizing objects as volumes built from perfectly planar surfaces (the « low poly » versions of objects). This would be a perfectly valid and useful characterization in this particular virtual world. Obviously, the ultimate goal of such simulation-trained AIs is to take them out of their simulation at some point and transfer them to real robotic bodies, hopefully making them useful in the real world. However, as soon as you switched the computer-rendered images of their « eyes » to an actual camera, all the complexity, messiness and unpredictability of the real world would appear and could leave them unable to use their former sensorimotor abstractions. Like any good developmental AI, the agent would have adapted to the peculiarities of its virtual environment, and to make it work in the real world we would be back to square one, trying to readapt it with slow robots, countless experiments and so on. (The virtual world models might serve as a bootstrap, but this is far from guaranteed, because regularities picked up in a relatively abstract world can be irrelevant to the real world in a non-incremental way, for example weird background regularities, aliasing cues, etc.)

This criticism is becoming increasingly irrelevant as computer graphics keep improving, to the point that the visual quality of certain games or simulations is approaching photorealism. See for example the work done on UnrealRox. Also, compare the two renderings below, one from the robot simulator Webots in the 2000s, and the other from a recent video game (Red Dead Redemption 2):

Recent improvements in rendering technology include photogrammetric or procedural textures and the introduction of real-time ray tracing, which should push realism to unprecedented levels in the coming decade. The fidelity of physics simulation inside virtual worlds is also steadily increasing, even if it is still somewhat insufficient today.

The second main criticism concerns the poverty (or outright absence) of social interactions. Most simulations (or games) feature “Non-Player Characters”, or NPCs, whose behaviors and expressions are extremely limited, usually following more or less rigid scripts, and which are sometimes comically unaware of their surroundings. No meaningful interaction can occur with them, and the scope for non-verbal interaction is extremely limited. The players themselves are embodied in more or less rigid avatars with a very limited expressive repertoire. However, it is widely recognized in developmental psychology (and therefore in developmental AI/Robotics) that rich social interactions, especially early non-verbal ones, are essential scaffolds for building up language, and later lay the foundation of a theory of mind that helps model other actors’ intentions, beliefs and communication attempts. One of the tough challenges for a developmental system is to move from predicting the behavior of objects and the environment to predicting the behavior of actors. The need for experimentation here is fulfilled first by non-verbal signals, and then by language, which acts as a speech-based experimental probe, just as motor actions were used to probe the sensorimotor space. Here again, a purely observational stance is not viable and an experimental approach is essential, but this of course requires a rich social environment to interact with.

We could hope that progress in AI will make it possible to create more convincing NPCs that overcome these limitations, but this leads to a chicken-and-egg problem: how can we hope to train AIs developmentally using previously untrained (or less trained) AIs? There could be a positive feedback loop here, which might lead to some interesting results, but we would have no guarantee that the system would converge towards anything understandable or useful to us, and without scaffolding it might take a huge amount of time. More importantly, the clear asymmetry of maturity and skills between parents and child during development seems crucial to properly bootstrapping intelligence. So, who would play the role of the parents?

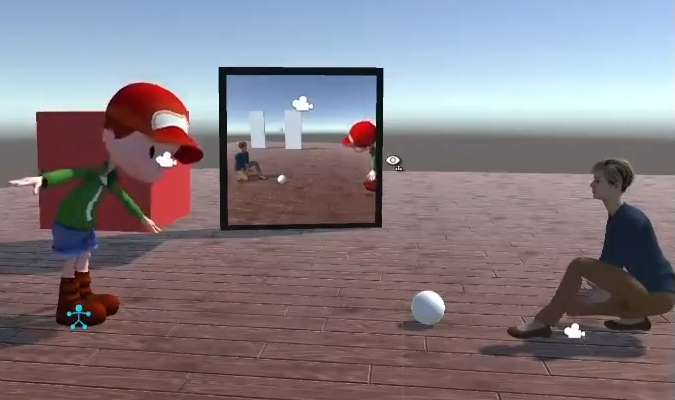

One promising alternative that we advocate here is to remove the NPCs altogether and replace them with real humans controlling “avatars” (player-controlled characters in the virtual world). Human-controlled avatars are at the heart of the video game industry, so there is nothing particularly new here, but in our context this raises some new challenges:

- We must create avatars with a wide repertoire of non-verbal social behaviors covering an extremely nuanced range: facial expressions, body language, hand/arm coordination, gaze expression and gaze following. Some of this exists today in character emotes, but we are far from what would be required.

- We need much more advanced control devices to capture the user’s expressions and map them to the avatar. Brain-computer interfaces are making progress and will probably be the way to go, but camera-based facial expression recognition could also help in the shorter term.

- One or two caretakers will not be enough to train an AI in a virtual world without falling back into the slow learning we described for robots. To speed up learning, the AI agent should interact with millions of participants in parallel. This of course raises the challenge of somehow fusing the learning done across many parallel experiences.

- The virtual world must be persistent, so that the progress made by AI agents can accumulate and build up over time. The world must also be editable and dynamic, to allow for rich environmental interactions. This is still a tremendous technological challenge.

- The virtual world must be reasonably realistic in terms of rendering and physics simulation (but, as we saw above, most games are already close to this).
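Fusing what copies of an agent learn in parallel world instances is an open problem, and the article does not propose a specific method. One standard technique that could serve as a starting point is federated averaging, sketched here as a swapped-in illustration under simplifying assumptions: each hypothetical world instance trains a copy of a small linear model on its own interactions, and the copies are merged by an experience-weighted average of their parameters. `LocalUpdate`, `local_train`, `fuse` and `federated_round` are illustrative names, not part of any existing system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LocalUpdate:
    weights: list[float]   # parameters learned inside one world instance
    n_interactions: int    # amount of experience that produced them


def local_train(weights, samples, lr=0.05, epochs=50):
    """SGD on squared error for a linear model y ≈ w · x, run inside one instance."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in samples:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]
    return w


def fuse(updates):
    """Federated averaging: mean of parameter vectors weighted by experience."""
    total = sum(u.n_interactions for u in updates)
    dim = len(updates[0].weights)
    return [sum(u.weights[k] * u.n_interactions for u in updates) / total
            for k in range(dim)]


def federated_round(global_weights, instances):
    """Each instance starts from the shared model, learns locally, then the results are fused."""
    updates = [LocalUpdate(local_train(global_weights, s), len(s)) for s in instances]
    return fuse(updates)


# Usage: two instances observe different situations governed by the same rule y = 2*x0 - x1
instance_a = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0)]
instance_b = [((1.0, 1.0), 1.0), ((2.0, 1.0), 3.0)]
w = [0.0, 0.0]
for _ in range(10):
    w = federated_round(w, [instance_a, instance_b])
```

Weighting by `n_interactions` lets instances with more experience count for more. The sketch ignores the harder issues a persistent multiplayer world would raise, such as agents whose experiences diverge so much that averaging their parameters no longer makes sense.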

No game or simulation to date covers the five points above. Point 1 (expressive avatars) depends on point 2 (advanced controllers), which is making good progress but is far from mature. Point 5 is already good enough in our opinion. Points 3 and 4 could be covered by a new generation of truly massively multiplayer games/universes, among them Dual Universe, the game we are developing at Novaquark. Dual Universe features a giant universe made of several planets, where potentially millions of players can connect at the same time (point 3), and which is fully editable and persistent thanks to voxel-based technology (point 4). For now, the graphics of Dual Universe are not at the cutting edge of the state of the art, but we are catching up and making progress every month. In any case, the current graphics are already very convincing and most likely sufficient to train AI systems.

Adding advanced avatars (point 1) and convincing controllers for them (point 2) could therefore open the door to experiments with simulated robots interacting with players, as part of a research program based on developmental AI/Robotics and involving millions of interactions with human agents. It would be possible to introduce a dedicated gameplay loop around the idea of “raising” your avatar (not replacing the current gameplay loops, but offering an extra option for interested players). In a much more simplified form, this has already been tried, with commercial success, with Tamagotchi and with games like Creatures, The Sims, etc. However, the grounded nature of the proposed experiment would make it far more profound, and therefore potentially more rewarding for participants, while also being of scientific interest.

To summarize, the proposed AI agents would have to exhibit the following properties:

- Fully grounded sensorimotor simulation (no cheating allowed, so for example no access to the scene graph). All sensors and motors should in principle be transferable to a real robot. The work of Kevin O’Regan and Alban Laflaquiere is especially interesting here.

- Growing body and sensorimotor scope, to emulate staged learning (see for example the work of James Law here)

- Agents should be driven by artificial curiosity as their primary motivation to learn (see for example the work of Pierre-Yves Oudeyer on an operational definition of artificial curiosity)

- Access to a rich physical and social environment. Beyond the proposed avatar expressiveness, a richer physical simulation than what is currently possible in Dual Universe would have to be partially implemented: we could introduce “physicalized bubbles” (or instances) inside which detached voxel volumes could be physicalized (falling on the ground, etc.), and implement some form of fluid dynamics, which is currently not possible at the scale of the whole world.

- Inside these instantiated physicalized bubbles, it should be possible to accelerate time in order to speed up the learning of sensorimotor grounding.
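Of the properties above, curiosity as a driver of learning is perhaps the easiest to illustrate in a few lines. The toy below is loosely inspired by the learning-progress idea associated with Pierre-Yves Oudeyer's work, but it is a simplified sketch, not his algorithm: the agent mostly practises whichever activity currently shows the largest drop in prediction error over a sliding window, with a little random exploration. The three activities, their error curves, the window size and the exploration rate are all hypothetical.

```python
import random
from collections import deque


class Activity:
    """A toy sensorimotor activity that yields a prediction error each time it is practised."""

    def __init__(self, name, error_fn, window=20):
        self.name = name
        self.error_fn = error_fn          # hypothetical: (practice_count, rng) -> error
        self.count = 0
        self.errors = deque(maxlen=window)

    def practise(self, rng):
        self.errors.append(self.error_fn(self.count, rng))
        self.count += 1

    def learning_progress(self):
        """Mean error of the older half of the window minus that of the newer half."""
        if len(self.errors) < self.errors.maxlen:
            return float("inf")           # not enough data yet: explore it first
        half = self.errors.maxlen // 2
        recent = list(self.errors)
        return sum(recent[:half]) / half - sum(recent[half:]) / half


def run(activities, steps=600, epsilon=0.1, seed=0):
    """Curiosity loop: mostly practise the activity with the highest learning progress."""
    rng = random.Random(seed)
    for _ in range(steps):
        if rng.random() < epsilon:
            chosen = rng.choice(activities)
        else:
            chosen = max(activities, key=lambda a: a.learning_progress())
        chosen.practise(rng)
    return {a.name: a.count for a in activities}


activities = [
    Activity("mastered", lambda n, rng: 0.01),                   # already predictable
    Activity("learnable", lambda n, rng: 1.0 / (1 + 0.05 * n)),  # error falls with practice
    Activity("noise", lambda n, rng: 0.5 + 0.1 * rng.random()),  # high but unlearnable error
]
counts = run(activities)
print(counts)
```

A pure error-maximizer would fixate on the `noise` activity, whose error stays highest; rewarding the drop in error instead pushes the agent away from what it has already mastered and towards what it can still learn. With such a short window, random fluctuations still give the noisy activity some attention, so a practical system would need smoother progress estimates.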

Getting back to my first point about the current limits of deep learning, it is clear that deep learning would be an essential tool for engineering the perception and categorization capabilities of these agents, but it is just one tool here among others. The core questions that still need answering revolve around developmental pathways, semantic grounding, evolutionary dynamics, intrinsic motivation, theory of mind, joint attention, etc., and connect more broadly with a research program I articulated some years ago when I ran the Softbank Robotics AI Lab in Paris.

Currently, Dual Universe is gearing up to launch its beta in summer 2020, so we are nowhere near starting work on this research program. It is, however, a promising and exciting prospect for AI that could open up if the game itself becomes a success. Much has already been done using video games to train AIs, but I believe that the scale and social dimension introduced by a game like Dual Universe could bring new opportunities for the developmental AI community.

—

Addendum: A friend of mine pointed me to the excellent short story by Ted Chiang, “The Lifecycle Of Software Objects”, which is a wonderful illustration of the ideas above. I highly recommend reading it!